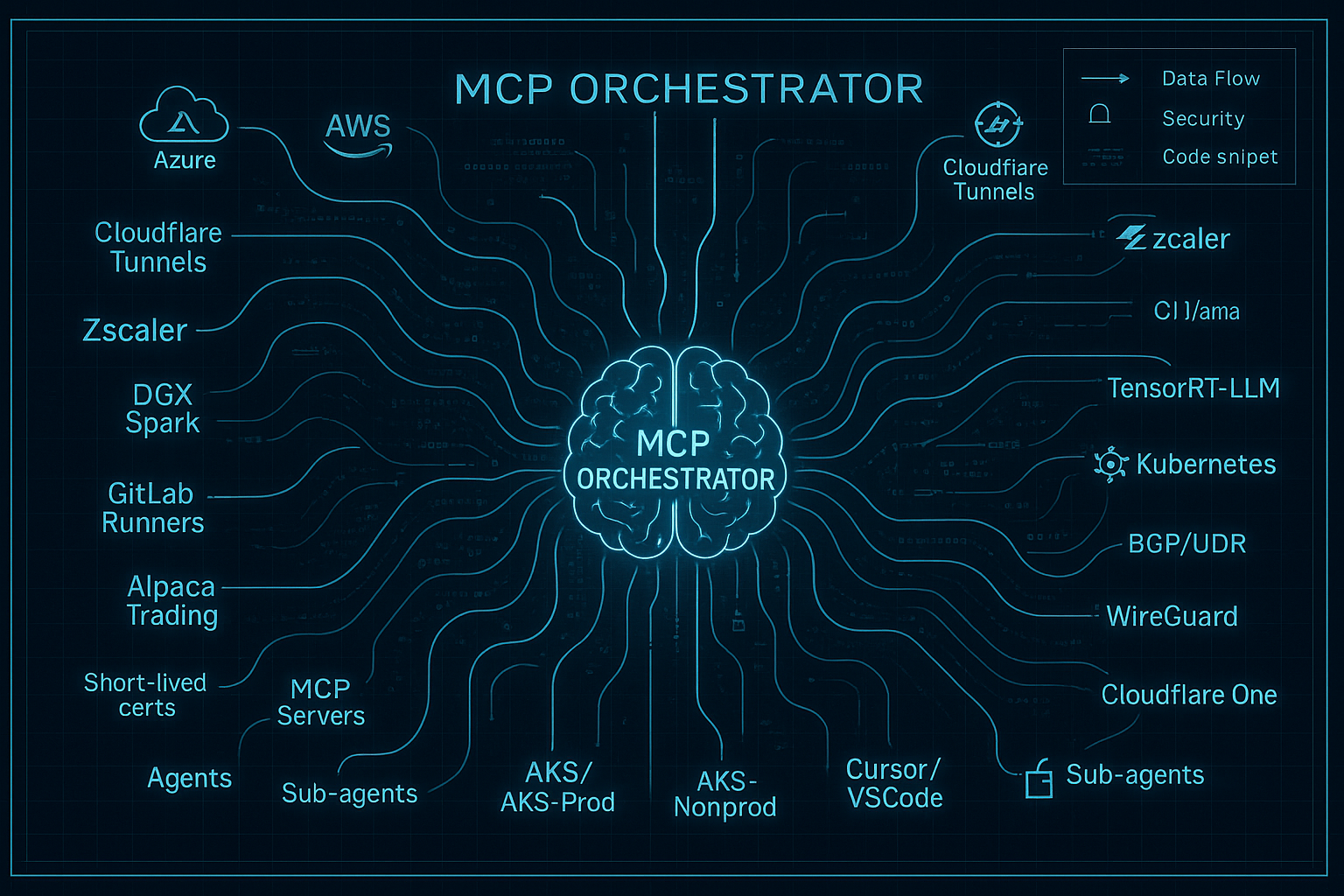

Unlocking AI Tentacles: Injecting Context Across Controlled Networks

How the Model Context Protocol turns your private networks into composable context streams that keep AI assistants grounded, auditable, and fast.

Unlocking AI Tentacles

We keep asking models for better answers, but the real unlock is giving them safe reach into the networks we already control. The Model Context Protocol (MCP) is the wiring harness that lets assistants extend “tentacles” into file systems, IDEs, documents, and operational tooling without bespoke glue code for each surface. When done right, MCP injection feels less like jailbreaking and more like installing a USB-C hub for intelligence.

MCP as the Injection Layer

Anthropic describes MCP as a way to turn isolated AI assistants into connected systems, replacing one-off connectors with a shared protocol that any client or server can implement. The official documentation reinforces the metaphor: MCP is “a USB-C port for AI applications,” standardizing how models tap into data sources, tools, and workflows so that context flows bidirectionally instead of living in prompt hacks.
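To make the shared protocol concrete: MCP messages travel as JSON-RPC 2.0, and a client discovers and invokes server capabilities through methods such as `tools/list` and `tools/call`. The sketch below only builds the request envelopes and leaves out the transport and the `initialize` handshake that a real session starts with. The `read_file` tool and its arguments are hypothetical; real names come from whatever the server returns for `tools/list`.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # each request in a session needs its own id


def make_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the envelope MCP messages use."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask a server which tools it exposes, then call one of them.
list_tools = make_request("tools/list")
call_tool = make_request(
    "tools/call",
    # Hypothetical tool name and arguments, for illustration only.
    {"name": "read_file", "arguments": {"path": "README.md"}},
)
print(list_tools)
print(call_tool)
```

Because every server speaks the same envelope, the client code above does not change when a new server is attached; only the tool names and arguments do.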

That standardization matters when you own multiple network zones—developer laptops, production clusters, air-gapped enclaves—and need repeatable guardrails everywhere. By pairing a capable MCP client with domain-specific servers, you can push rich context into the model, stage actions, and verify outcomes without giving up control of the underlying infrastructure.

Field Notes from the MCP Ecosystem

DesktopCommanderMCP: Full-Fidelity Workspace Control

The wonderwhy-er/DesktopCommanderMCP project shows how much surface area a single MCP server can expose. It layers filesystem reads and writes, ripgrep search, surgical text edits, and even Python/Node/R REPL sessions on top of the baseline MCP filesystem server. Interactive process management, streaming command output, and audit logging turn the server into a remote ops console that still runs in your Docker container or workstation.

mcp-shell: Secure Actuation for Host Commands

sonirico/mcp-shell focuses on the actuator role—executing shell commands with discipline. It ships a Go-based server that enforces allowlists, regex block rules, output size limits, and resource caps, all while emitting structured JSON responses (stdout, stderr, exit codes, duration). Optional Docker isolation, unprivileged users, and YAML security profiles make it a solid drop-in for production shells where you need to log every invocation.
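The guardrail pattern described here can be sketched in a few lines. This is not mcp-shell's code (that project is written in Go and driven by YAML profiles); it is a minimal Python illustration of the same idea, with an allowlist, regex block rules, an output cap, a timeout, and a structured result. The function name `run_guarded` and all policy values are assumptions made for the example.

```python
import json
import re
import subprocess
import sys
import time

# Hypothetical policy values; a real deployment would load them from config.
BLOCKED_PATTERNS = [re.compile(r"\brm\s+-rf\b"), re.compile(r"\bsudo\b")]
MAX_OUTPUT_BYTES = 4096
TIMEOUT_SECONDS = 5


def run_guarded(argv, allowed):
    """Run argv only if policy permits it; always return a structured result."""
    if not argv or argv[0] not in allowed:
        return {"allowed": False, "reason": "executable not in allowlist"}
    command = " ".join(argv)
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(command):
            return {"allowed": False, "reason": f"matched block rule {pattern.pattern}"}
    started = time.monotonic()
    try:
        proc = subprocess.run(argv, capture_output=True, timeout=TIMEOUT_SECONDS)
    except subprocess.TimeoutExpired:
        return {"allowed": True, "reason": "timeout", "exit_code": None}
    except OSError as exc:
        return {"allowed": True, "reason": str(exc), "exit_code": None}
    return {
        "allowed": True,
        # Truncate before decoding so oversized output cannot flood the client.
        "stdout": proc.stdout[:MAX_OUTPUT_BYTES].decode(errors="replace"),
        "stderr": proc.stderr[:MAX_OUTPUT_BYTES].decode(errors="replace"),
        "exit_code": proc.returncode,
        "duration_ms": round((time.monotonic() - started) * 1000),
    }


# The interpreter path stands in for an allowlisted binary so the example runs anywhere.
allowlist = {sys.executable}
ok = run_guarded([sys.executable, "-c", "print('hello')"], allowlist)
denied = run_guarded(["curl", "http://example.com"], allowlist)
print(json.dumps(ok), json.dumps(denied), sep="\n")
```

Passing an argument list instead of a shell string avoids shell interpolation entirely, which is one reason the allowlist check can be a simple set lookup.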

SoloFlow MCP: Document Intelligence with Guardrails

benyue1978/solo-flow-mcp leans into knowledge scaffolding. It watches a .soloflow/ directory, listing, reading, and updating artifacts with path isolation tied to a projectRoot. The headline feature is a library of 32 prompts spanning requirements, design, development, testing, and release. When an AI client connects, it inherits that structured playbook—perfect for keeping cross-network documentation synchronized without hand-authoring workflows for each environment.
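Path isolation of this kind usually comes down to resolving each requested path and refusing anything that lands outside the root. The following is a minimal sketch under that assumption, not SoloFlow's own implementation; the helper name `resolve_in_root` and the file names are hypothetical.

```python
import tempfile
from pathlib import Path


def resolve_in_root(project_root: Path, relative: str) -> Path:
    """Return the absolute path for `relative`, or raise if it escapes project_root."""
    root = project_root.resolve()
    # resolve() collapses ".." segments and follows symlinks before the check.
    candidate = (root / relative).resolve()
    if candidate != root and root not in candidate.parents:
        raise PermissionError(f"{relative!r} escapes {root}")
    return candidate


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    inside = resolve_in_root(root, ".soloflow/requirements.md")
    try:
        resolve_in_root(root, "../other-repo/secrets.md")
        escaped = True
    except PermissionError:
        escaped = False
    print(inside.name, escaped)
```

Checking the resolved path rather than the raw string is the important design choice: a string prefix test would miss `..` segments and symlinks that point outside the project.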

Run Command for Cursor: IDE-Class Injection

On the client side, the Run Command extension (dxt.so/dxts/run-command-mcp) showcases how MCP servers plug into an IDE. Cursor layers a chat UX on top of shell execution so developers can ask for port scans, run templates, or inspect processes without leaving the editor. Under the hood it spins up benyue1978’s command MCP, proving that a single server can service both headless agents and human-in-the-loop assistants.

Awesome MCP Servers: An Expanding Toolchain Catalog

The punkpeye/awesome-mcp-servers index highlights how quickly the ecosystem is blooming—browser automation, cloud APIs, databases, observability stacks, even FHIR medical connectors. Treat it as the package registry for new tentacles: when you need Salesforce data or Kubernetes audits inside your private agent mesh, you likely just point an existing MCP server at the right credentials.

Designing Your Tentacle Mesh

    graph LR
      subgraph Local Ops Zone
        DC[DesktopCommander MCP]
        Shell[mcp-shell]
      end
      subgraph Knowledge Fabric
        Solo[SoloFlow MCP]
      end
      subgraph Developer Edge
        Cursor[Run Command MCP]
      end
      Client[Claude / Cursor / Custom Agent]
      Vault[Secrets & Policy]
      Logs[Audit Lake]
      Client --> DC
      Client --> Shell
      Client --> Solo
      Client --> Cursor
      Shell --> Logs
      DC --> Logs
      Solo --> Logs
      Cursor --> Logs
      Vault -. policy/credentials .-> Shell
      Vault -. policy/credentials .-> DC
      Vault -. templates .-> Solo

This architecture keeps the AI client intentionally thin. Each MCP server owns a slice of capability and feeds context back into an audit lake. Policies and secrets live outside the model boundary so you can rotate credentials or narrow scopes without retraining anything.
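One way to picture the thin client is as a router that holds no capability of its own and only knows which server owns which tool. The sketch below is an assumption about how such a registry might look, not part of any of the projects above. The zones and server names mirror the diagram; the tool names are illustrative placeholders rather than the servers' actual tool lists.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerEntry:
    name: str
    zone: str
    tools: frozenset


# Hypothetical registry: one owner per tool, grouped by the zones in the diagram.
REGISTRY = [
    ServerEntry("desktop-commander", "Local Ops Zone", frozenset({"read_file", "edit_file"})),
    ServerEntry("mcp-shell", "Local Ops Zone", frozenset({"shell_exec"})),
    ServerEntry("soloflow", "Knowledge Fabric", frozenset({"list_docs", "update_doc"})),
    ServerEntry("run-command", "Developer Edge", frozenset({"run_command"})),
]


def route(tool: str) -> ServerEntry:
    """Find the single server that owns a tool; ambiguity or absence is an error."""
    owners = [server for server in REGISTRY if tool in server.tools]
    if len(owners) != 1:
        raise LookupError(f"expected exactly one owner for {tool!r}, found {len(owners)}")
    return owners[0]


target = route("shell_exec")
print(target.name, target.zone)
```

Requiring exactly one owner per tool keeps the audit trail unambiguous: every logged call maps to one server and therefore one policy set.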

Security, Observability, and Trust

- Command hygiene: mcp-shell’s allowlist/blocklist plus execution timeouts keep shell access from becoming a path to installing rootkits. Run it in Docker or chroot when bridging sensitive networks.

- File change provenance: DesktopCommander logs every tool call with timestamps and arguments, so you can replay how an agent mutated source or infrastructure files.

- Scoped knowledge: SoloFlow’s projectRoot isolation ensures documentation prompts never bleed into the wrong repository, even if the AI is juggling multiple engagements.

- Human handshakes: Cursor’s Run Command channel keeps a developer in the loop, letting you stage automated suggestions before they hit production.

Wrap those controls with centralized telemetry—ship stdout/stderr, prompt history, and safety signals into your SIEM or data lake so you can trace every tentacle back to an accountable principal.
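A telemetry record that ties each tool call to a principal might look like the sketch below. The field names, the `audit_record` helper, and the example principal are all assumptions made for illustration; adapt them to whatever schema your SIEM or data lake expects.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(principal, server, tool, arguments, exit_code):
    """Build one JSON line describing a tool call, keyed to an accountable principal."""
    canonical_args = json.dumps(arguments, sort_keys=True)
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "principal": principal,
        "server": server,
        "tool": tool,
        "arguments": arguments,
        # A digest of the canonical arguments makes identical calls easy to group.
        "arguments_sha256": hashlib.sha256(canonical_args.encode()).hexdigest(),
        "exit_code": exit_code,
    }
    return json.dumps(record, sort_keys=True)


# Hypothetical call: who ran what, where, and how it ended.
line = audit_record("alice@example.com", "mcp-shell", "shell_exec", {"command": "ls -la"}, 0)
print(line)
```

Emitting one self-contained JSON object per line keeps the records easy to stream and to parse independently downstream.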

Implementation Playbook

- Map the surfaces. Inventory the networks and data planes you need to expose (source repos, build pipelines, compliance docs, ops tooling).

- Choose or build servers. Start with off-the-shelf connectors from the awesome list; extend with custom MCP servers when you need bespoke APIs or hardware.

- Harden the envelope. Apply mcp-shell style guardrails—least-privilege accounts, per-command allowlists, output quotas, and network namespaces.

- Enrich the prompts. Use SoloFlow-style playbooks or your own templates so every invocation begins with policy, context, and success criteria already loaded.

- Instrument everything. Mirror DesktopCommander’s audit logging: stream prompts, tool invocations, and results to observability stacks so you can investigate anomalies.

- Iterate on clients. Claude Desktop, Cursor, or custom agents can all drive the same servers. Pilot in lower-risk zones, validate behavior, then roll into production enclaves.
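The last step of the playbook, piloting in lower-risk zones before production, can be expressed as a small promotion gate. The zone names and their ordering below are hypothetical, and the sketch assumes validation results are tracked as a simple set of zone names.

```python
# Hypothetical zones, ordered from lowest to highest risk.
ZONE_ORDER = ["dev-laptop", "staging", "production-enclave"]


def can_deploy(target_zone, validated_zones):
    """Allow deployment only if every lower-risk zone has already been validated."""
    lower_risk = ZONE_ORDER[: ZONE_ORDER.index(target_zone)]
    return all(zone in validated_zones for zone in lower_risk)


def next_zone(validated_zones):
    """Return the next zone to pilot in, or None once rollout is complete."""
    for zone in ZONE_ORDER:
        if zone not in validated_zones:
            return zone
    return None


validated = {"dev-laptop"}
print(can_deploy("staging", validated))             # staging is unlocked
print(can_deploy("production-enclave", validated))  # production is still gated
print(next_zone(validated))
```

Keeping the gate outside the MCP servers themselves means the same server build can move through zones unchanged while the rollout policy evolves separately.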

Looking Ahead

MCP gives us a principled way to grow AI “tentacles” without sacrificing network sovereignty. Each connector becomes a composable brick: attach a new data source, enforce policy centrally, and the model can start using it without retraining. As the ecosystem widens, the hard work shifts from writing glue code to designing the governance model that lets assistants roam confidently through your infrastructure.

The takeaway: treat MCP injection not as a jailbreak, but as a disciplined integration strategy. If you own the networks, you can let the model reach into them—one audited, policy-bound tentacle at a time.

Related Posts

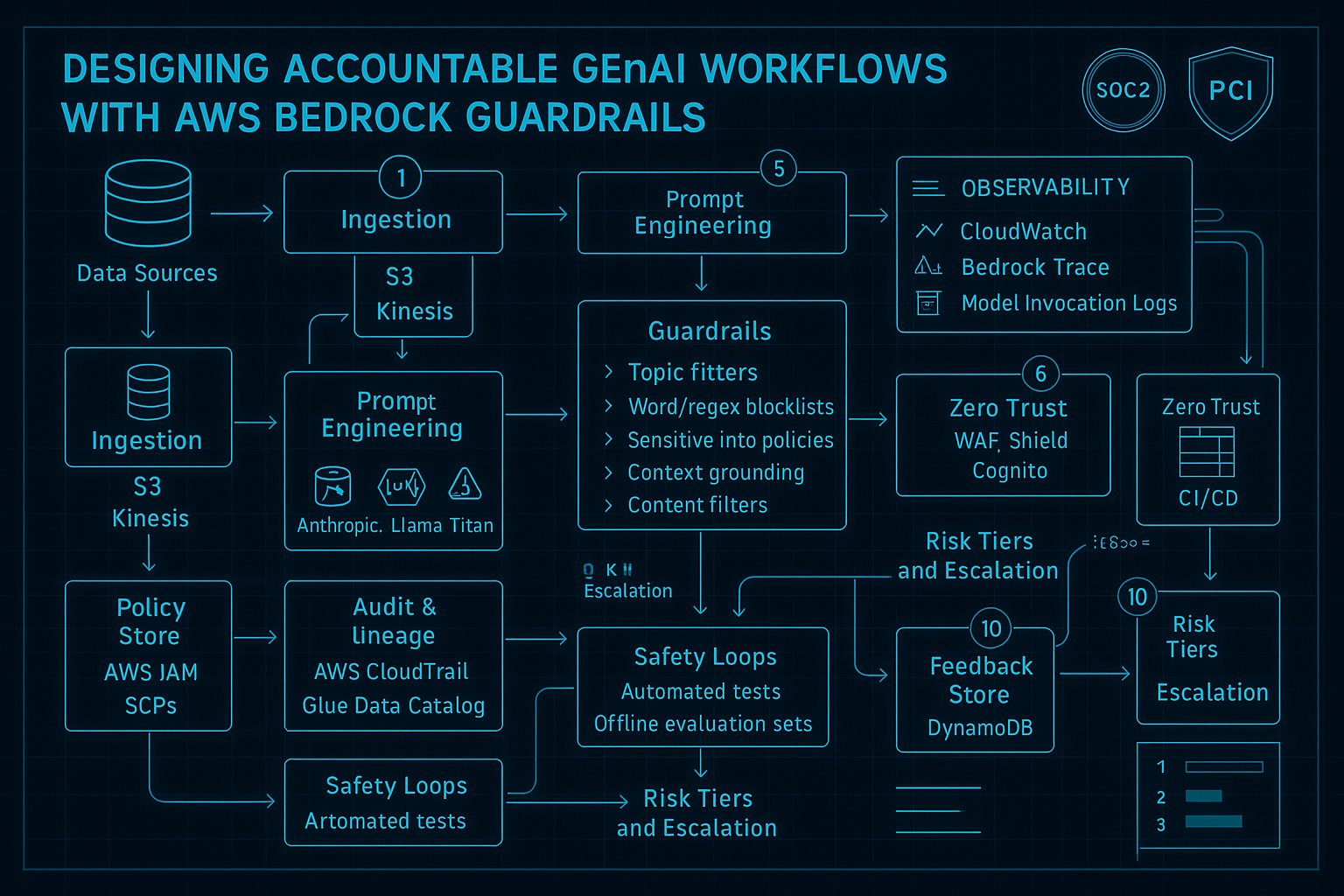

Designing Accountable GenAI Workflows with AWS Bedrock Guardrails

An operator's playbook for shipping responsible GenAI assistants on AWS by mixing Bedrock Guardrails, event-driven monitoring, and zero-trust platform controls.

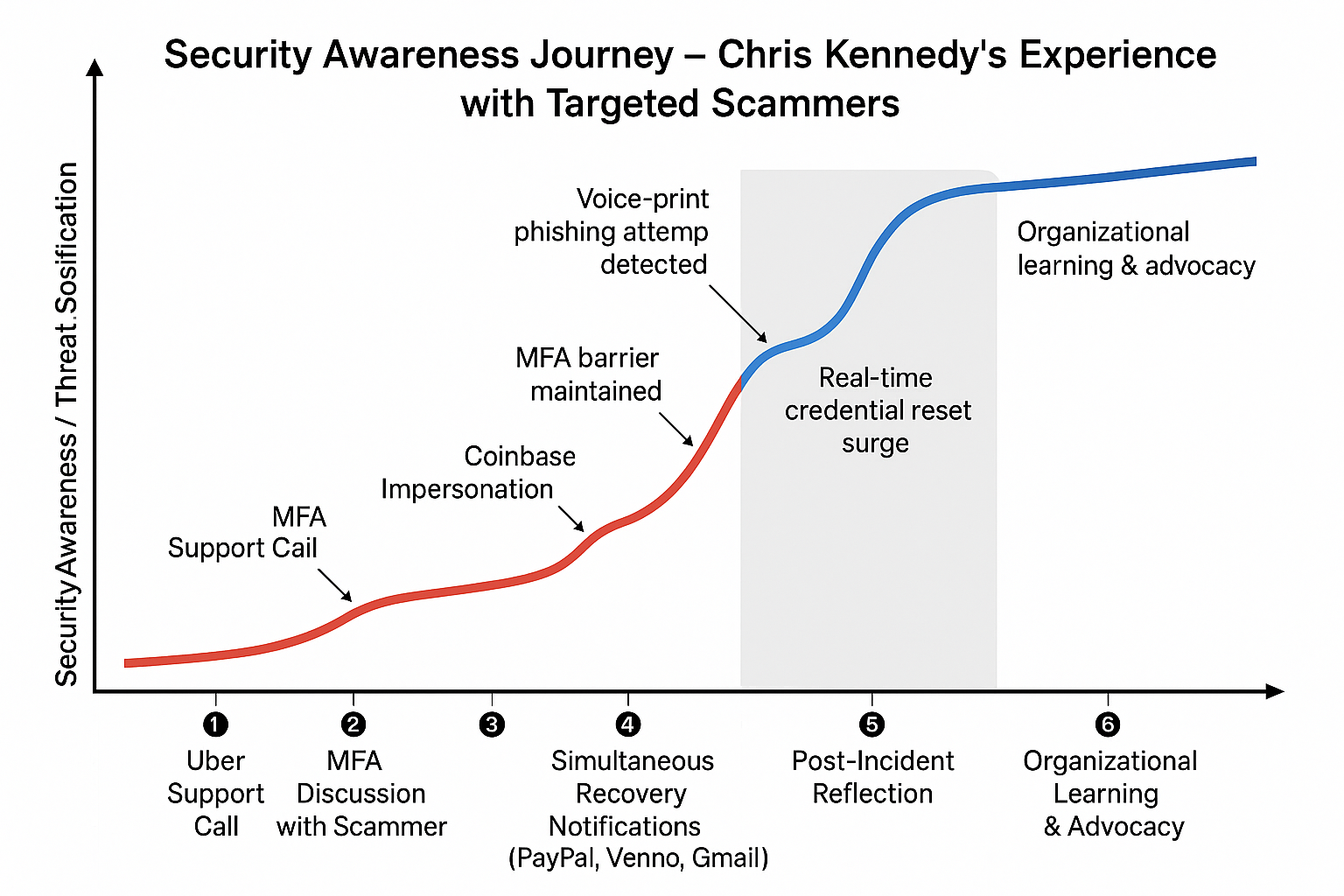

Security Awareness Journey — Learning from Chris Kennedy's Targeted Scammer Experience

A deep dive into the sophisticated social engineering attack that targeted security expert Chris Kennedy, and the critical lessons we can all learn about modern scammer tactics, MFA protection, and organizational security awareness.

AI Orchestration for Network Operations: Autonomous Infrastructure at Scale

How a single AI agent orchestrates AWS Global WAN infrastructure with autonomous decision-making, separation-of-powers governance, and 10-100x operational acceleration.

Comments & Discussion

Discussions are powered by GitHub. Sign in with your GitHub account to leave a comment.