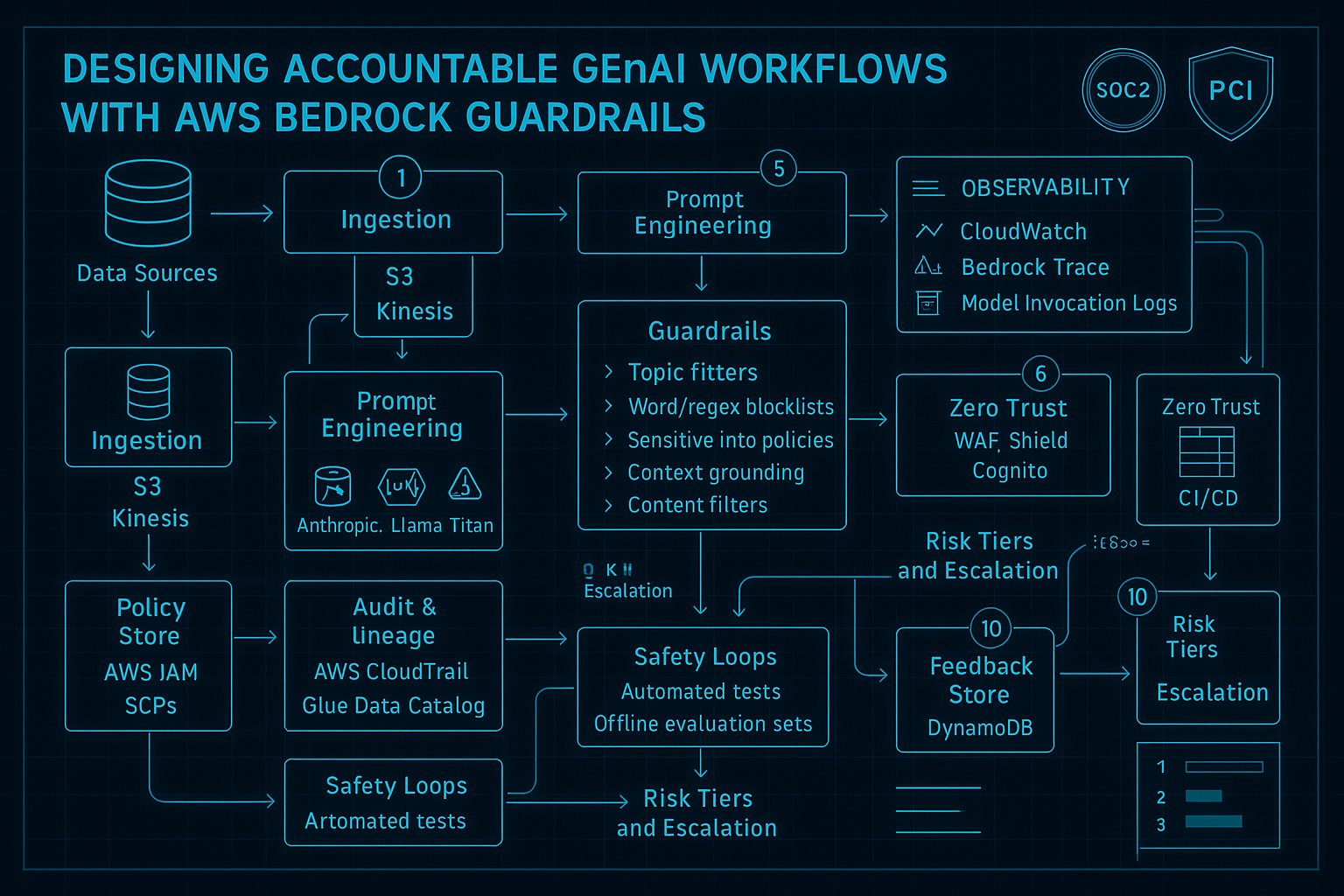

Designing Accountable GenAI Workflows with AWS Bedrock Guardrails

An operator's playbook for shipping responsible GenAI assistants on AWS by mixing Bedrock Guardrails, event-driven monitoring, and zero-trust platform controls.

Designing Accountable GenAI Workflows with AWS Bedrock Guardrails

Teams are racing to ship generative AI assistants for operations, support, and self-service automation. Yet the controls we take for granted in traditional application stacks—change management, observability, and least-privilege—are often missing once a model reaches production. In regulated environments, that gap blocks adoption as surely as latency or cost.

This guide breaks down how I build a defensible, audit-ready GenAI workflow on AWS using Bedrock Guardrails, AWS-native telemetry, and the same governance patterns that already protect the rest of the platform.

Four Guardrail Pillars

Content Safety & Moderation

Flag or block disallowed topics, PII, or brand-sensitive phrases before the request reaches the foundation model.

Prompt Hardening

Layer templated system prompts with dynamic policy controls to stop jailbreaks and role escalation.

Execution Boundaries

Restrict what downstream tools and automations the assistant may call, and scope those actions to least privilege IAM roles.

Continuous Verification

Stream every prompt/response pair into an immutable timeline so we can investigate drift, bias, or misuse.

🔗 Technology Mapping: These pillars map cleanly to Bedrock Guardrails, Amazon CloudWatch, AWS IAM, and Amazon OpenSearch Service.

Reference Architecture

sequenceDiagram

participant Client as Client App

participant Guardrail as Bedrock Guardrail

participant Model as FM (Claude 3 Sonnet)

participant Orchestrator as Step Functions Orchestrator

participant Tools as Controlled Toolchain

participant Audit as Audit Lake

Client->>Guardrail: Prompt + metadata

Guardrail-->>Client: Blocked? (policy violation)

Guardrail->>Model: Sanitized prompt

Model-->>Guardrail: Raw response

Guardrail->>Orchestrator: Response + safety signals

Orchestrator->>Tools: Conditional tool invocations (IAM-scoped)

Orchestrator->>Audit: Emit prompt/response/tool usage events

Tools-->>Orchestrator: Results

Orchestrator-->>Client: Final answer + referencesKey callouts:

- Guardrails run pre- and post-model, catching PII or policy breaches and attaching metadata for observability.

- The orchestration layer (I like Step Functions Express Workflows) decides which tools can run based on safety context and user entitlements.

- Every interaction is factored through an audit lake built with Kinesis Firehose → Amazon S3 → OpenSearch dashboards.

Implementing Bedrock Guardrails

import { BedrockAgentRuntimeClient, GuardrailEnforceCommand } from "@aws-sdk/client-bedrock-agent-runtime";

const runtime = new BedrockAgentRuntimeClient({ region: "us-east-1" });

export async function invokeWithGuardrail({ prompt, sessionId, userId }) {

const guardrailId = process.env.GUARDRAIL_ID!;

const modelArn = process.env.MODEL_ARN!;

const response = await runtime.send(

new GuardrailEnforceCommand({

guardrailIdentifier: guardrailId,

sessionId,

modelArn,

inputText: prompt,

metadata: {

userId,

channel: "SupportConsole",

piiHandling: "mask"

}

})

);

if (response.action === "BLOCKED") {

return {

blocked: true,

reason: response.blockingViolations?.map(v => v.category).join(", ") ?? "policy_violation"

};

}

return {

blocked: false,

output: response.outputText,

annotations: response.safetyAnnotations

};

}Tip: version your guardrails with Git-backed infrastructure-as-code. I use AWS CDK to codify sensitive topic lists, contextual prompts, and PII handling rules.

Governance Patterns That Scale

- Least Privilege IAM Roles — Provision a dedicated execution role per tool (Service Catalog, Systems Manager, Jira automations) and let Step Functions assume those roles case-by-case. No assistant gets administrator credentials.

- Signed References — Force every generated answer to cite a document or API call. If no factual grounding exists, return a graceful fallback instead of hallucinating.

- Explainability for Auditors — Stream Guardrail

safetyAnnotationsand Step FunctionsExecutionHistoryinto an append-only S3 bucket with Glacier Deep Archive policies. Pair that feed with Amazon Detective for investigations. - Policy as Code — Manage everything—Guardrail configs, tool catalogs, orchestration graphs—in the same repo and review flow as the rest of the platform.

Observability & Alerting

- Prompts & Responses — Capture a structured schema (

prompt_id,user,model,guardrail_version,tool_calls[]) and ship it to OpenSearch for searchability. - Safety Metrics — Emit CloudWatch metrics for

BlockedRequests,JailbreakAttempts, and custom business rules (PaymentWorkflowDenied). Wire alarms to PagerDuty. - Cost Controls — Use AWS Budgets and Fargate task quotas to shut down runaway usage.

- Replay Harness — Build a Step Functions state machine that replays archived prompts through new guardrail versions before promoting them.

Rollout Checklist

- Guardrail baseline deployed (PII masking, topic filters, prompt grounding).

- Step Functions orchestration wired to Bedrock Runtime with per-tool IAM roles.

- Observability pipeline (Firehose → S3 → OpenSearch) producing traces within 5 minutes.

- FinOps alarms tuned for request volume and latency.

- Security team has access to audit dashboards and replay harness.

Closing Thoughts

GenAI isn’t exempt from the controls that keep the rest of your AWS estate compliant. The combination of Bedrock Guardrails, event-driven orchestration, and least-privilege tool access lets platform teams move fast and maintain defensible posture.

If you want to pair on an implementation, or need a second set of eyes on your governance model, reach out via LinkedIn.

Filed under: building AI assistants that ship safely and stay audit-ready from day one.

Related Posts

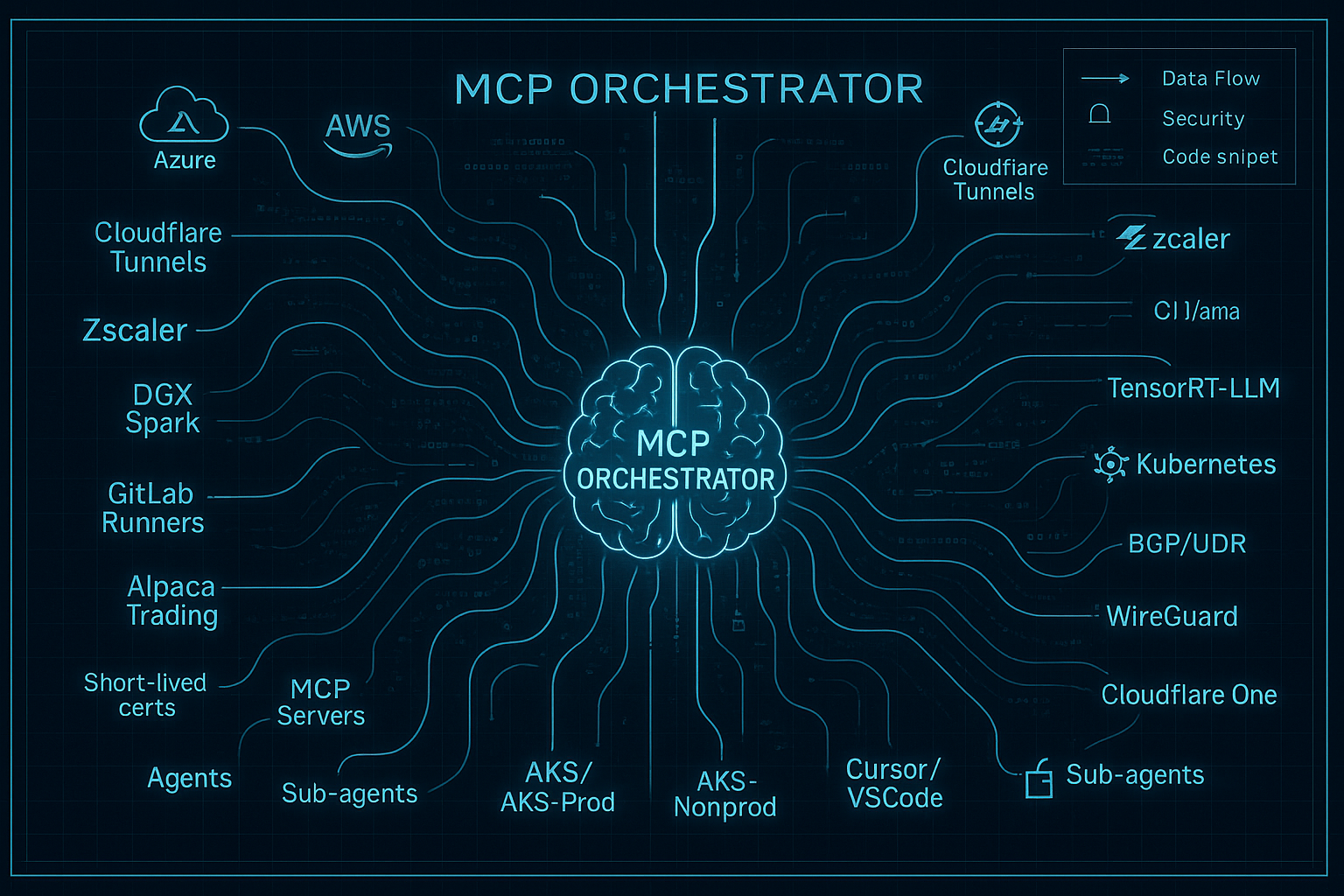

Unlocking AI Tentacles: Injecting Context Across Controlled Networks

How the Model Context Protocol turns your private networks into composable context streams that keep AI assistants grounded, auditable, and fast.

The Audit Agent: Building Trust in Autonomous AI Infrastructure

How an independent audit agent creates separation of powers for AI-driven infrastructure—preventing runaway automation while enabling autonomous operations at scale.

AI Orchestration for Network Operations: Autonomous Infrastructure at Scale

How a single AI agent orchestrates AWS Global WAN infrastructure with autonomous decision-making, separation-of-powers governance, and 10-100x operational acceleration.

Comments & Discussion

Discussions are powered by GitHub. Sign in with your GitHub account to leave a comment.