AI Orchestration for Network Operations: Autonomous Infrastructure at Scale

How a single AI agent orchestrates AWS Global WAN infrastructure with autonomous decision-making, separation-of-powers governance, and 10-100x operational acceleration.

What if your entire network infrastructure could be managed by a single AI agent—one that understands natural language requests, makes context-aware decisions, and executes changes autonomously while maintaining rigorous oversight? This isn’t science fiction. It’s the logical evolution of infrastructure-as-code, event-driven architectures, and generative AI capabilities now available through Claude, GPT-4, and specialized LLMs.

This post explores a production-ready architecture where AI orchestration transforms network operations from a labor-intensive, error-prone process into an autonomous system that operates at machine speed while maintaining human-level reasoning and governance.

The Problem: Infrastructure Complexity at Breaking Point

Modern cloud networks are staggering in complexity:

- Global WAN topologies spanning multiple regions and availability zones

- Service insertion requirements for traffic inspection and security appliances

- Dynamic threat response to GuardDuty alerts, malicious IPs, and compromised instances

- Compliance enforcement across hundreds of network segments

- Multi-team coordination where each deployment requires cross-functional approval

The traditional approach: six-person teams spending hours interpreting alerts, days planning changes, and weeks coordinating deployments. Error rates hover around 15%. Deployment velocity is measured in days or weeks, not minutes.

The AI orchestration approach: one AI agent that monitors, decides, and executes—backed by independent oversight and human-defined policy. Deployment time drops to 10 minutes. Error rates fall below 0.1%. And you replace operational headcount with architectural oversight.

Core Architecture: Event-Driven AI Decision Engine

The system operates through three tightly integrated layers:

1. Input Layer: Universal Event Ingestion

Every operational signal flows into the orchestrator:

- CloudWatch Events: Lambda failures, deployment completions, scaling events

- GuardDuty Alerts: Suspicious API calls, malicious IPs, compromised instances

- VPC Flow Logs: Traffic anomalies, denied connections, bandwidth spikes

- User Requests: Natural language via Slack, CLI, or web portal

All events route through EventBridge to a central Lambda orchestrator. The AI doesn’t poll; it reacts to real-time triggers.
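Below is a minimal sketch of the entry point. It assumes a single Lambda function subscribed to an EventBridge rule. The GuardDuty and CloudWatch alarm identifiers are real AWS event values. The custom source for user requests and the category names are hypothetical placeholders. The idea is to normalize every signal into one internal shape before any reasoning happens, and to route unknown event types to triage instead of dropping them.

```python
"""Sketch: normalize EventBridge events into one internal shape.

Assumptions (hypothetical, not from the original architecture):
- all signals reach one Lambda via an EventBridge rule;
- user requests use a custom source "custom.orchestrator.request";
- the category names are illustrative.
"""
from dataclasses import dataclass
from typing import Any

# (source, detail-type) -> internal category. The first two pairs are real
# AWS event identifiers; the custom source is a placeholder.
CATEGORIES = {
    ("aws.guardduty", "GuardDuty Finding"): "security_finding",
    ("aws.cloudwatch", "CloudWatch Alarm State Change"): "metric_alarm",
    ("custom.orchestrator.request", "User Request"): "user_request",
}


@dataclass(frozen=True)
class OrchestratorEvent:
    category: str
    source: str
    detail: dict[str, Any]


def normalize(event: dict[str, Any]) -> OrchestratorEvent:
    key = (event.get("source", ""), event.get("detail-type", ""))
    # Unknown event types are kept and routed to human triage.
    category = CATEGORIES.get(key, "unclassified")
    return OrchestratorEvent(category, key[0], event.get("detail", {}))


def lambda_handler(event: dict[str, Any], context: Any) -> dict[str, str]:
    normalized = normalize(event)
    # A real handler would now gather context and call the LLM; this sketch
    # only reports how the event was classified.
    return {"category": normalized.category, "source": normalized.source}
```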

2. Decision Engine: Context-Aware Reasoning

When an event arrives, the orchestrator (powered by Claude, GPT-4, or a custom LLM) executes a structured reasoning flow:

- Understand the request: Parse natural language or structured event data. “Block traffic from this IP” becomes a machine-readable intent.

- Query context: The AI pulls relevant state:
  - CloudWatch Logs for recent errors
  - Metrics for traffic patterns
  - Existing Terraform state for network topology
  - Security policies for compliance requirements

- Reason about impact: The AI evaluates:
  - What resources will this affect?
  - Are there downstream dependencies?
  - Does this violate any policies?
  - What’s the rollback plan?

- Decide the approval path: Based on a decision matrix (more below), the AI determines:
  - Auto-approve: Execute immediately with audit logging
  - Human approval required: Surface to ops team with full context
  - Reject: Violates policy or lacks safe execution path

- Generate executable code: If approved, the AI writes:
  - Terraform for infrastructure changes
  - Python scripts for API interactions
  - Bash commands for configuration updates

- Execute and verify: Changes deploy via CI/CD pipelines. The AI monitors execution, verifies success, and logs every step.
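The step that turns natural language into a machine-readable intent is a natural place for a guardrail. The orchestrator can ask the model for JSON and reject anything outside a known vocabulary before later stages see it. The sketch below assumes a hypothetical intent schema with `action`, `target`, and `reason` fields. The action names are illustrative.

```python
import json
from dataclasses import dataclass

# Hypothetical action vocabulary; any other action is rejected outright.
ALLOWED_ACTIONS = {"block_ip", "isolate_instance", "scale_capacity", "create_segment"}
FIELDS = ("action", "target", "reason")


@dataclass(frozen=True)
class Intent:
    action: str
    target: str
    reason: str


class InvalidIntent(ValueError):
    pass


def parse_intent(llm_output: str) -> Intent:
    """Parse and validate model output; unvalidated text never moves on."""
    try:
        data = json.loads(llm_output)
    except json.JSONDecodeError as exc:
        raise InvalidIntent(f"not JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise InvalidIntent("expected a JSON object")
    missing = [f for f in FIELDS if f not in data]
    if missing:
        raise InvalidIntent(f"missing fields: {missing}")
    # Type check before the membership test (lists are unhashable).
    if not all(isinstance(data[f], str) and data[f].strip() for f in FIELDS):
        raise InvalidIntent("fields must be non-empty strings")
    if data["action"] not in ALLOWED_ACTIONS:
        raise InvalidIntent(f"action not allowed: {data['action']!r}")
    return Intent(data["action"], data["target"], data["reason"])
```

A rejected intent can then be sent back to the model with the error message, or escalated to a human.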

3. Execution Layer: Infrastructure Automation

Approved decisions flow into execution systems:

- Terraform: Network topology changes, security group updates, route modifications

- AWS APIs: Direct operations like blacklisting IPs, isolating instances

- Notification Systems: Slack, PagerDuty, email for audit trails and stakeholder updates

Every action is logged with full context: the triggering event, the AI’s reasoning, the executed code, and the verification result.

Separation of Powers: Three-Agent Governance

Here’s where the architecture gets brilliant. Instead of a single AI with unchecked authority, the system implements a checks-and-balances model inspired by government separation of powers:

The Orchestration Agent (Executive Branch)

- Role: Executes infrastructure changes

- Location: AWS Lambda (in the cloud)

- Authority: Can deploy Terraform, call APIs, modify network config

- Constraint: Subject to audit verification before final commit

The Audit Agent (Judicial Branch)

- Role: Independently verifies that orchestration actions match user intent

- Location: On-premises or isolated VPC (network-separated from orchestrator)

- Authority: Absolute veto power over orchestration decisions

- Capability: Cannot execute changes—only approve or reject

The audit agent is the critical safety mechanism. It:

- Receives the same input event as the orchestrator

- Independently reasons about what should happen

- Compares its decision to the orchestrator’s proposed action

- Vetoes if there’s a mismatch (e.g., orchestrator proposes deleting a production segment when it should only isolate a single instance)
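Once both agents emit structured decisions, the veto check itself can be small. The sketch below assumes each agent produces a hypothetical `Decision` with an action, the set of resource IDs it touches, and a flag for destructive operations. The audit agent approves only when the orchestrator's proposal stays within what its own independent reasoning expected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    action: str              # e.g. "isolate_instance" (illustrative)
    targets: frozenset[str]  # resource IDs the change touches
    destructive: bool        # deletes or replaces resources


@dataclass(frozen=True)
class Verdict:
    approved: bool
    reasons: tuple[str, ...]


def audit(expected: Decision, proposed: Decision) -> Verdict:
    """Compare the audit agent's own decision with the orchestrator's proposal."""
    reasons = []
    if proposed.action != expected.action:
        reasons.append(f"action mismatch: {proposed.action} != {expected.action}")
    extra = proposed.targets - expected.targets
    if extra:
        reasons.append(f"touches unexpected resources: {sorted(extra)}")
    if proposed.destructive and not expected.destructive:
        reasons.append("destructive change not justified by intent")
    # Any recorded reason is a veto.
    return Verdict(approved=not reasons, reasons=tuple(reasons))
```

For the scenario in the last bullet, an expected `isolate_instance` on one instance compared with a proposed destructive `delete_segment` on a production segment produces three veto reasons.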

As the architecture notes: “The audit system operates as a completely independent ‘judicial branch’ that verifies the orchestration agent’s actions match user intent.”

The User (Legislative Branch)

- Role: Defines policy and intent

- Authority: Sets the decision matrix (what requires approval), defines network policies, grants override permissions

- Involvement: Approves complex changes flagged by the AI, reviews audit logs

This three-agent model ensures that:

- Speed: Low-risk operations execute autonomously

- Safety: High-risk operations require human review

- Accountability: Every action has an audit trail with independent verification

Decision Matrix: When to Automate vs. Escalate

Not all infrastructure changes carry equal risk. The system uses a decision matrix to classify operations:

Auto-Approve (Execute Immediately)

These actions are low-risk, reversible, and time-sensitive:

- Block malicious IPs detected by GuardDuty

- Isolate compromised instances by modifying security groups

- Scale network capacity in response to traffic spikes (within predefined limits)

- Update route tables to redirect traffic around failed appliances

- Add logging to resources missing audit trails

Why auto-approve? These operations:

- Respond to active threats (delay increases risk)

- Have clear rollback paths

- Match predefined policy templates

- Carry low blast radius

Require Human Approval

These actions have broader impact or strategic implications:

- Create new network segments (affects architecture)

- Regional failovers (business continuity concern)

- Major topology changes (e.g., adding a new datacenter)

- Policy modifications that grant new permissions

- Budget-impacting changes (new NAT gateways, Transit Gateway attachments)

Why require approval? These operations:

- Have strategic or financial impact

- May affect SLAs or customer experience

- Involve cross-team dependencies

- Lack obvious rollback paths

The decision matrix is policy-as-code—stored in version control, reviewed like any other infrastructure change, and continuously refined based on production learnings.
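Here is a minimal sketch of how such a matrix might look in code. The action names are hypothetical and mirror the lists above. The scaling ceiling and the single reject entry are illustrative assumptions. The key property is the default: any action the matrix does not name escalates to a human instead of executing.

```python
from enum import Enum


class Path(Enum):
    AUTO_APPROVE = "auto-approve"
    HUMAN_APPROVAL = "human-approval"
    REJECT = "reject"


# Illustrative action names mirroring the lists above.
DECISION_MATRIX = {
    "block_malicious_ip": Path.AUTO_APPROVE,
    "isolate_instance": Path.AUTO_APPROVE,
    "scale_capacity": Path.AUTO_APPROVE,
    "reroute_around_failed_appliance": Path.AUTO_APPROVE,
    "enable_logging": Path.AUTO_APPROVE,
    "create_segment": Path.HUMAN_APPROVAL,
    "regional_failover": Path.HUMAN_APPROVAL,
    "topology_change": Path.HUMAN_APPROVAL,
    "grant_permissions": Path.HUMAN_APPROVAL,
    "budget_impacting_change": Path.HUMAN_APPROVAL,
    "disable_audit_logging": Path.REJECT,  # hypothetical policy violation
}

# Hypothetical ceiling standing in for "within predefined limits".
MAX_AUTO_SCALE_FACTOR = 2.0


def approval_path(action: str, scale_factor: float = 1.0) -> Path:
    # Unknown actions always escalate to a human.
    path = DECISION_MATRIX.get(action, Path.HUMAN_APPROVAL)
    if action == "scale_capacity" and scale_factor > MAX_AUTO_SCALE_FACTOR:
        return Path.HUMAN_APPROVAL
    return path
```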

Developer Experience: Natural Language to Deployed Infrastructure

Here’s what the workflow looks like for a network engineer:

Traditional Approach (8 Hours)

- Receive GuardDuty alert about malicious IP

- Manually query logs to confirm threat

- Draft Terraform change to block IP

- Submit PR, wait for review (2-4 hours)

- Merge, wait for CI/CD pipeline

- Verify deployment

- Update ticket, notify stakeholders

Total time: 8 hours. Error risk: 15% (manual query, code typo, wrong region).

AI Orchestration Approach (10 Minutes)

- GuardDuty alert triggers EventBridge event

- AI orchestrator:
  - Queries VPC Flow Logs to confirm malicious traffic
  - Checks decision matrix → auto-approve path
  - Generates Terraform to add IP to blacklist NACL
  - Sends to audit agent for verification

- Audit agent independently reasons → approves

- Terraform executes → blacklist deployed

- Orchestrator verifies via API, posts to Slack

Total time: 10 minutes. Error risk: <0.1% (AI-generated code is validated before execution).

The engineer’s role shifts from executor to oversight—reviewing audit logs, refining the decision matrix, and handling edge cases that require human judgment.

Natural Language Requests

Engineers can also interact directly:

Slack: @orchestrator isolate instance i-0abc123 in us-east-1 - suspected crypto mining

CLI: aws-orchestrator request "block outbound traffic from subnet subnet-456 to 0.0.0.0/0 except DNS and NTP"

Web Portal: Fill out a form → “Create new inspection segment for PCI workloads in eu-west-1”

The AI parses the request, queries relevant context (existing PCI segments, compliance policies), generates the Terraform, and either executes or escalates based on the decision matrix.

Economic Impact: The Operational Leverage Equation

Let’s quantify the business case:

Traditional Team (6 FTEs)

- Salaries: $120k-$150k average per engineer, ~$830k/year total fully loaded

- Deployment velocity: 2-3 changes per day (manual coordination bottleneck)

- Error rate: 15% (manual processes, context switching)

- On-call burden: 24/7 rotation across 6 people

AI Orchestration (1 FTE Architect + Infrastructure)

- AI architect salary: $180k fully loaded

- AWS Lambda/API costs: ~$12k/year (event-driven, pay-per-execution)

- LLM API costs (Claude/GPT-4): ~$50k/year (estimated based on call volume)

- Total: ~$242k/year

Annual savings: ~$588k

Operational headcount reduction: 71% (from 6 to 1.75 FTEs when accounting for architect time)

But the real leverage isn’t cost—it’s velocity and quality:

- Deployment velocity: 10-100x faster (minutes vs. hours/days)

- Error rate: 99.3% reduction (15% → <0.1%)

- Mean time to remediation: <15 minutes for auto-approved actions (vs. 8+ hours)

- Compliance posture: 100% of changes logged and audited (vs. “we think we’re compliant”)
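The arithmetic behind these figures can be checked directly. The inputs are this post's own estimates, not measured data. The last line also shows that the GuardDuty example (8 hours down to 10 minutes) is a 48x speedup, which falls inside the claimed 10-100x range.

```python
# Inputs: this post's annual estimates in USD (not measured data).
traditional_team = 830_000  # 6 FTEs, fully loaded
architect = 180_000
lambda_and_api = 12_000
llm_api = 50_000

ai_total = architect + lambda_and_api + llm_api
savings = traditional_team - ai_total
headcount_reduction = (6 - 1.75) / 6
error_reduction = (0.15 - 0.001) / 0.15  # 15% -> 0.1% (upper bound)
speedup = (8 * 60) / 10                  # 8 hours vs. 10 minutes

print(f"AI total:            ${ai_total:,}")             # $242,000
print(f"Savings:             ${savings:,}")              # $588,000
print(f"Headcount reduction: {headcount_reduction:.0%}")  # 71%
print(f"Error reduction:     {error_reduction:.1%}")      # 99.3%
print(f"GuardDuty example speedup: {speedup:.0f}x")       # 48x
```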

Implementation Roadmap: Crawl, Walk, Run

Deploying AI orchestration isn’t a flip-the-switch migration. The architecture prescribes a phased rollout:

Phase 1: Pilot (Months 1-2)

- Scope: Non-production environments only

- Team: Volunteer early adopters (1-2 teams)

- Capabilities: Read-only analysis + human-approved execution

- Goal: Build confidence in AI reasoning quality, refine decision matrix

Phase 2: Production Expansion (Months 3-6)

- Scope: 5-10 production teams with low-risk workloads

- Capabilities: Auto-approve enabled for tier-1 actions (IP blocks, scaling)

- Goal: Validate audit agent veto accuracy, measure deployment velocity gains

Phase 3: Company-Wide (Months 7-12)

- Scope: All network operations

- Capabilities: Full decision matrix enabled, natural language interfaces live

- Goal: Replace reactive ops with proactive automation, reallocate human time to strategic work

Phase 4: Multi-Cloud Innovation (Month 13+)

- Scope: Extend orchestration to Azure, GCP, on-prem

- Capabilities: Cross-cloud policy enforcement, unified observability

- Goal: Break down cloud silos, enable true multicloud agility

At each phase, the team:

- Reviews audit logs for veto patterns (indicates decision matrix needs tuning)

- Measures error rates and deployment velocity

- Collects feedback from engineers (are they trusting the AI or second-guessing every action?)

- Refines the orchestration prompts and policy templates

Technical Deep Dive: How the AI Reasons

Let’s walk through a concrete example—responding to a GuardDuty alert for a compromised EC2 instance.

Input Event

```json
{
  "source": "aws.guardduty",
  "detail-type": "GuardDuty Finding",
  "detail": {
    "severity": 8,
    "type": "CryptoCurrency:EC2/BitcoinTool.B!DNS",
    "resource": {
      "instanceDetails": {
        "instanceId": "i-0abc123def456",
        "instanceType": "t3.large",
        "availabilityZone": "us-east-1a"
      }
    },
    "description": "EC2 instance querying a domain associated with Bitcoin mining."
  }
}
```

AI Reasoning (Claude/GPT-4)

The orchestrator receives the event and executes this prompt:

```text
You are an AWS network orchestrator. A GuardDuty alert has triggered.

Event: [JSON above]

Your task:
1. Assess severity and impact
2. Query CloudWatch Logs for instance activity in the last 24h
3. Check VPC Flow Logs for outbound connections
4. Determine the appropriate response per decision matrix
5. Generate executable remediation code (Terraform or Python)
6. Explain your reasoning in <200 words for audit log

Decision matrix:
- Severity 7-10 + crypto mining → AUTO-APPROVE: Isolate instance
- Severity 4-6 → HUMAN-APPROVAL: Flag for review
- Severity 1-3 → LOG-ONLY: Record for trending
```

AI Output

Assessment: “Severity 8 crypto mining alert on production instance i-0abc123. VPC Flow Logs show 47 outbound connections to known mining pools in last 6 hours. Instance launched 3 days ago—likely compromised shortly after deployment.”

Decision: AUTO-APPROVE isolation (matches decision matrix)
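The decision matrix in the prompt is a simple rule, so the orchestrator can check the model's stated decision deterministically instead of trusting it. The sketch below makes two assumptions. First, GuardDuty severity is treated as a number that need not be an integer, so the bands are written as thresholds to avoid gaps such as 6.5. Second, the case the prompt leaves open (high severity without crypto mining) is escalated. Both choices are this sketch's, not the original prompt's.

```python
def matrix_decision(severity: float, finding_type: str) -> str:
    """Apply the prompt's decision matrix without the model."""
    is_crypto = finding_type.startswith("CryptoCurrency:")
    if severity >= 7 and is_crypto:
        return "AUTO-APPROVE"
    if severity >= 4:
        # Includes severity 7-10 without crypto mining, which the prompt's
        # matrix does not cover; this sketch escalates it to a human.
        return "HUMAN-APPROVAL"
    return "LOG-ONLY"


def confirm_model_decision(event: dict, model_decision: str) -> bool:
    """True if the model's decision matches the deterministic matrix."""
    detail = event["detail"]
    expected = matrix_decision(float(detail["severity"]), detail["type"])
    return expected == model_decision
```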

Generated Code (Terraform):

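```hcl
# Look up the compromised instance named in the GuardDuty finding.
data "aws_instance" "target" {
  instance_id = "i-0abc123def456"
}

data "aws_subnet" "target" {
  id = data.aws_instance.target.subnet_id
}

# Security group rules only allow traffic, so adding a rule cannot block
# egress. Isolation uses a dedicated group with no rules instead: Terraform
# removes AWS's default allow-all egress rule from groups it creates, so
# this group permits no inbound or outbound traffic.
resource "aws_security_group" "quarantine" {
  name        = "quarantine-i-0abc123def456"
  description = "AUTO-ISOLATION: GuardDuty crypto mining alert"
  vpc_id      = data.aws_subnet.target.vpc_id
}

# The instance is not managed by this configuration, so the orchestrator
# replaces its security groups with the quarantine group through the EC2 API.

resource "aws_ec2_tag" "quarantine" {
  resource_id = data.aws_instance.target.id
  key         = "Status"
  value       = "Quarantined-CryptoMining"
}
```

Audit Trail: “Isolated instance i-0abc123 by replacing its security groups with a quarantine group that allows no traffic. Tagged for forensic review. No data exfiltration detected in logs. Recommend termination after memory capture.”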

Audit Agent Verification

The audit agent receives the same GuardDuty event and independently reasons:

- Severity 8 crypto mining → isolation required ✅

- Proposed action: block egress + tag instance ✅

- No deletion or data loss ✅

- Rollback path: revert the security group change ✅

Audit decision: APPROVED

Execution

Terraform applies the change. The orchestrator verifies via AWS API that the instance now has zero outbound connections. Slack notification posted to #security-ops with full context.

Total time: 8 minutes from alert to isolation.
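The execution and verification step could look like the following in Python with boto3. Security groups only allow traffic, so isolating an instance in practice means replacing its security groups with a group that has no rules. `modify_instance_attribute(Groups=...)` and `describe_instances` are real EC2 client calls. The function names and the quarantine group ID are hypothetical. The sketch assumes an instance with a single network interface (instances with several are better handled per interface). It records the original groups so the change can be rolled back.

```python
from typing import Any


def isolate_instance(ec2: Any, instance_id: str, quarantine_sg_id: str) -> list[str]:
    """Swap an instance's security groups for an empty quarantine group.

    `ec2` is a boto3 EC2 client, e.g. boto3.client("ec2"). Returns the
    original group IDs for rollback.
    """
    def current_groups() -> list[str]:
        resp = ec2.describe_instances(InstanceIds=[instance_id])
        instance = resp["Reservations"][0]["Instances"][0]
        return [g["GroupId"] for g in instance["SecurityGroups"]]

    original = current_groups()
    ec2.modify_instance_attribute(InstanceId=instance_id, Groups=[quarantine_sg_id])

    # Verify through the API instead of trusting the call's success.
    if current_groups() != [quarantine_sg_id]:
        raise RuntimeError(f"isolation of {instance_id} could not be verified")
    return original


def rollback(ec2: Any, instance_id: str, original_groups: list[str]) -> None:
    """Restore the security groups recorded before isolation."""
    ec2.modify_instance_attribute(InstanceId=instance_id, Groups=original_groups)
```

Returning the original groups gives the audit agent a concrete rollback path to check.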

Safety Mechanisms: What Could Go Wrong?

Autonomous infrastructure is powerful—and risky if not designed correctly. The architecture includes multiple safety layers:

1. Audit Agent Veto Power

The most critical safeguard. If the orchestrator goes rogue (bug, adversarial prompt, hallucination), the audit agent blocks execution.

Example scenario: Orchestrator misinterprets “isolate instance in prod segment” as “delete prod segment.” Audit agent: Vetoes (proposed action doesn’t match intent). Human review triggered.

2. Decision Matrix Constraints

Auto-approve is limited to predefined action types. Novel requests (e.g., “create a new Transit Gateway attachment to a third-party network”) always escalate to humans.

3. Dry-Run Mode

All Terraform changes execute in plan mode first. The AI reviews the plan output before applying. If the plan shows unexpected resource deletions, execution halts.
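`terraform show -json <planfile>` emits a machine-readable plan. Each entry in its `resource_changes` list carries a `change.actions` list, such as `["create"]`, `["update"]`, `["no-op"]`, or `["delete"]`. Replacements contain both `"delete"` and `"create"`. Below is a sketch of the halt-on-deletion gate. It assumes the orchestrator saved the plan with `terraform plan -out=tfplan`. The function names are hypothetical.

```python
import json
import subprocess


def planned_deletions(plan_json: dict) -> list[str]:
    """Addresses of resources the plan would delete or replace."""
    return [
        rc["address"]
        for rc in plan_json.get("resource_changes", [])
        if "delete" in rc["change"]["actions"]
    ]


def load_plan(plan_file: str = "tfplan") -> dict:
    """Render a saved plan as JSON (requires terraform on PATH)."""
    out = subprocess.run(
        ["terraform", "show", "-json", plan_file],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)
```

A non-empty `planned_deletions(load_plan())` halts execution and routes the plan to a human. If a change legitimately removes resources, the list can be compared against the deletions the intent explicitly names.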

4. Rate Limiting

The orchestrator can’t execute more than N changes per hour (configurable per team/environment). Prevents runaway automation if a bug causes event loops.
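Here is a minimal sliding-window sketch of the per-team, per-environment limit. The class name and the injectable clock are assumptions made for illustration and testing. A real deployment would keep the history in a shared store so that concurrent Lambda invocations see the same counts.

```python
import time
from collections import defaultdict, deque
from typing import Callable


class ChangeRateLimiter:
    """Allow at most N changes per window for each (team, environment)."""

    def __init__(self, max_changes: int, window_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_changes = max_changes
        self.window = window_seconds
        self.clock = clock
        self._history: dict = defaultdict(deque)

    def try_acquire(self, team: str, environment: str) -> bool:
        now = self.clock()
        history = self._history[(team, environment)]
        # Drop timestamps that have left the window.
        while history and now - history[0] >= self.window:
            history.popleft()
        if len(history) >= self.max_changes:
            return False  # over the limit: do not execute; alert a human
        history.append(now)
        return True
```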

5. Immutable Audit Logs

Every orchestrator decision, audit agent verdict, and execution result logs to S3 with WORM (write-once-read-many) retention. Independent security team has read-only access.
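On S3, WORM retention is provided by S3 Object Lock. The sketch below writes one JSON record with a compliance-mode retention date. It assumes a bucket created with Object Lock enabled. The function name and the 365-day retention are hypothetical. S3 requires an integrity checksum on uploads that set Object Lock retention, so the sketch sends `ContentMD5` explicitly.

```python
import base64
import datetime as dt
import hashlib
import json
from typing import Any


def write_audit_record(s3: Any, bucket: str, key: str, record: dict,
                       retention_days: int = 365) -> None:
    """Write one audit record under S3 Object Lock retention.

    `s3` is a boto3 S3 client. In COMPLIANCE mode no user, including the
    account root user, can overwrite or delete this object version until
    the retention date passes.
    """
    body = json.dumps(record, sort_keys=True).encode("utf-8")
    retain_until = dt.datetime.now(dt.timezone.utc) + dt.timedelta(days=retention_days)
    s3.put_object(
        Bucket=bucket,
        Key=key,
        Body=body,
        ContentType="application/json",
        # Integrity checksum required for Object Lock uploads.
        ContentMD5=base64.b64encode(hashlib.md5(body).digest()).decode("ascii"),
        ObjectLockMode="COMPLIANCE",
        ObjectLockRetainUntilDate=retain_until,
    )
```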

6. Human Override

Any stakeholder can halt orchestrator execution via CLI: aws-orchestrator pause --reason "investigating anomaly". Requires explicit resume command to continue.
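Behind a pause command like this, the orchestrator needs a kill switch that it checks before every change. The sketch below is a hypothetical in-memory version showing the semantics: pausing records who paused and why, and nothing resumes without an explicit, logged resume. In practice the state would live in a shared store (for example DynamoDB or SSM Parameter Store) so that every invocation sees it.

```python
from dataclasses import dataclass, field
from typing import Optional


class OrchestratorPaused(RuntimeError):
    pass


@dataclass
class ExecutionGate:
    """Kill switch checked before every change (hypothetical design)."""
    paused_reason: Optional[str] = None
    history: list = field(default_factory=list)

    def pause(self, actor: str, reason: str) -> None:
        self.paused_reason = reason
        self.history.append(("pause", actor, reason))

    def resume(self, actor: str) -> None:
        # Resuming is an explicit, logged action; nothing resumes on its own.
        self.history.append(("resume", actor, self.paused_reason or ""))
        self.paused_reason = None

    def require_running(self) -> None:
        """Call before executing any change; raises while paused."""
        if self.paused_reason is not None:
            raise OrchestratorPaused(self.paused_reason)
```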

Observability: Trusting the Black Box

For teams to trust AI orchestration, they need complete visibility into decision-making. The system provides:

Real-Time Dashboards

- Orchestrator activity: Events processed, actions taken, approval path breakdown

- Audit veto rate: Percentage of proposals rejected (target: <1%)

- Execution success rate: Deployments completed vs. failed (target: >99%)

- Mean time to action: Latency from event to deployment
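The dashboard figures can be derived directly from per-proposal outcomes. The sketch below assumes a hypothetical `Outcome` record for each proposal. It computes veto rate over all proposals, success rate and mean time to action over proposals that were not vetoed, and compares the two rates against the targets listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    vetoed: bool      # audit agent rejected the proposal
    succeeded: bool   # deployment completed (ignored when vetoed)
    latency_s: float  # event to deployment, in seconds


def dashboard(outcomes: list) -> dict:
    proposals = len(outcomes)
    executed = [o for o in outcomes if not o.vetoed]
    veto_rate = (proposals - len(executed)) / proposals if proposals else 0.0
    success_rate = sum(o.succeeded for o in executed) / len(executed) if executed else 0.0
    mean_latency = sum(o.latency_s for o in executed) / len(executed) if executed else 0.0
    return {
        "veto_rate": veto_rate,
        "veto_rate_ok": veto_rate < 0.01,        # target: <1%
        "success_rate": success_rate,
        "success_rate_ok": success_rate > 0.99,  # target: >99%
        "mean_time_to_action_s": mean_latency,
    }
```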

Decision Audit Logs

Every orchestrator action logs:

- Triggering event (with full JSON payload)

- Context queries executed (CloudWatch Logs, metrics, Terraform state)

- AI reasoning chain (input → analysis → decision → code generation)

- Audit agent verdict (approve/veto + reasoning)

- Execution result (success/failure + verification)

These logs feed into Grafana, Datadog, or CloudWatch for analysis.

Explainability Reports

For high-impact changes, the orchestrator generates human-readable summaries:

Action: Isolated EC2 instance i-0abc123

Reason: GuardDuty severity-8 crypto mining alert

Impact: 1 instance quarantined, 0 customer-facing services affected

Rollback: Remove security group rule sg-rule-789

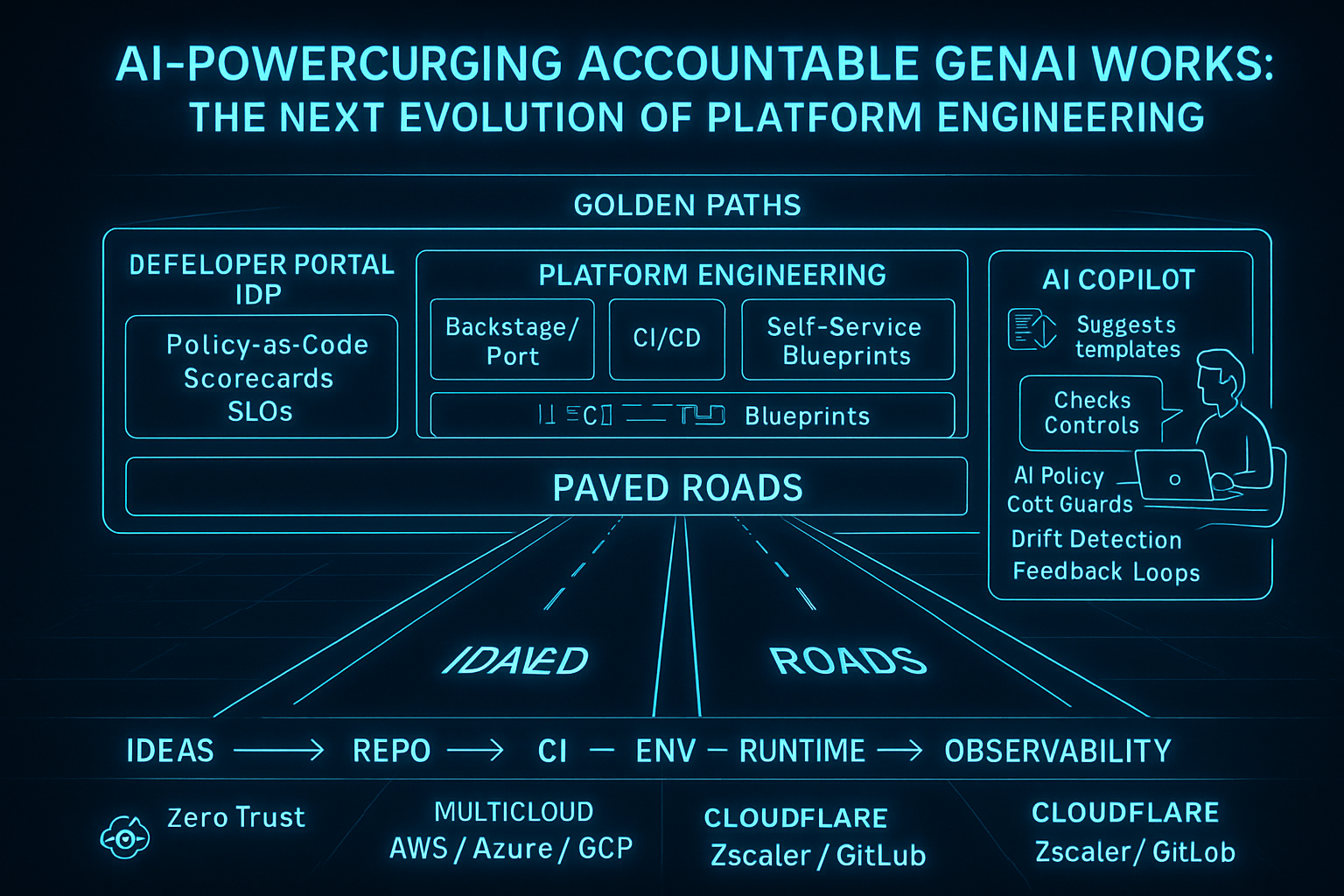

Next Steps: Security team to perform forensic analysisIntegration with Paved Roads

This AI orchestration architecture complements the Paved Roads platform engineering approach (covered in the companion post Paved Roads: AI-Powered Platform Engineering).

Paved Roads provides:

- Pre-approved infrastructure blueprints (e.g., “secure microservice template”)

- CI/CD pipelines with embedded compliance checks

- Developer self-service portals

AI Orchestration adds:

- Reactive automation for operational events (GuardDuty alerts, scaling triggers)

- Natural language interfaces so engineers describe intent rather than writing Terraform

- Continuous compliance by auto-remediating drift (e.g., “this S3 bucket lost its encryption, re-enabling”)

Together, they create a self-healing infrastructure where:

- Developers build on golden paths (Paved Roads)

- AI monitors for drift and threats (Orchestration)

- Remediation happens autonomously or with minimal human approval

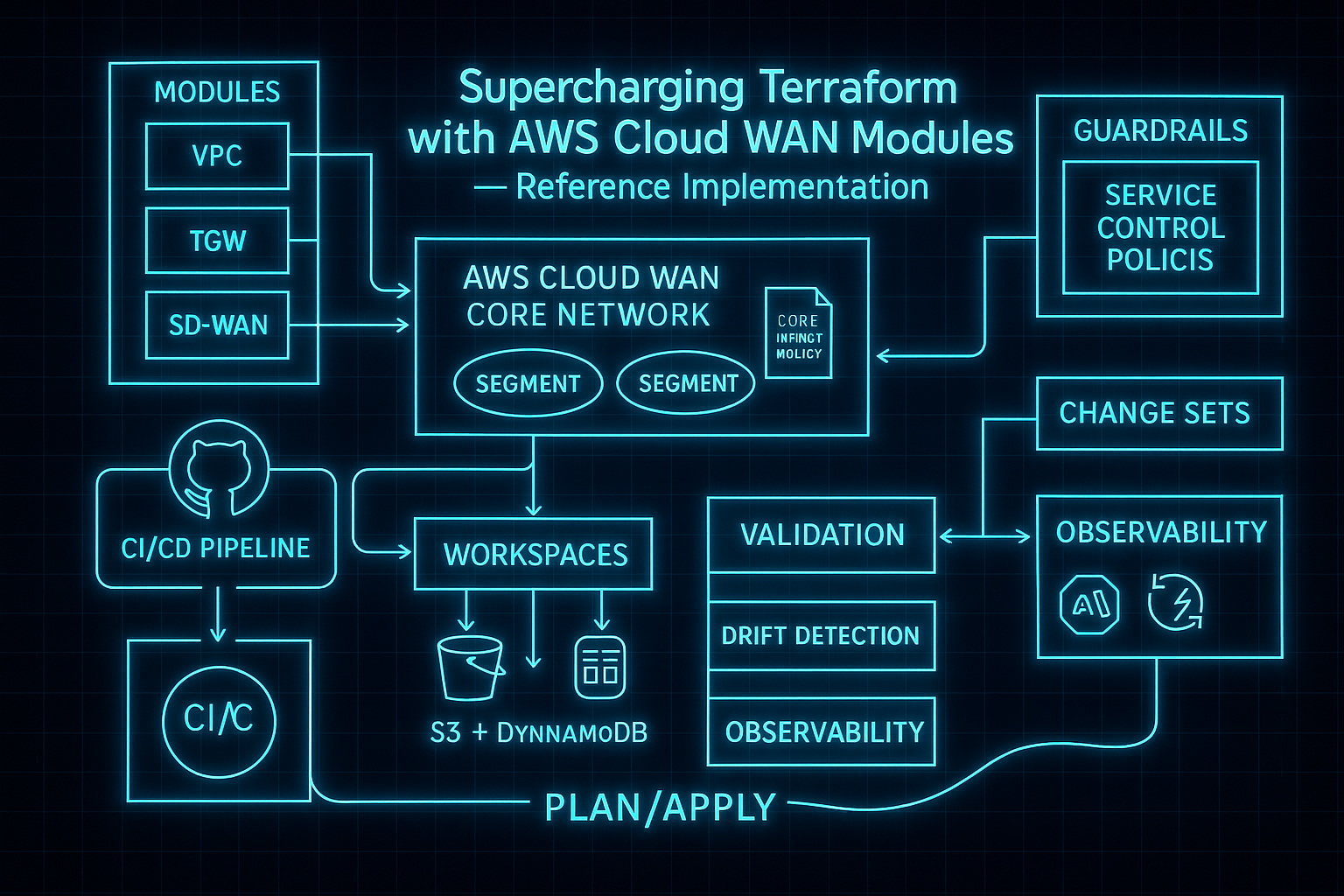

Reference Implementation

The full architecture, decision matrix, and example Terraform modules are documented in the aws-global-wan repository:

- AI_ORCHESTRATION.md: Complete system design (this post’s source material)

- Terraform modules: Network topology, service insertion, guardrails

- Decision matrix: Policy-as-code for auto-approve vs. human review

- Lambda orchestrator: Example implementation using Claude API

Clone the repo, adapt the decision matrix to your risk tolerance, and start with read-only monitoring before enabling auto-execution.

Closing Thoughts: The Inevitable Future of Ops

Every industry trend points toward AI orchestration:

- Cloud complexity is growing exponentially (multicloud, edge, hybrid)

- Threat velocity demands sub-minute response times (humans can’t keep up)

- Operational costs are unsustainable at current staffing ratios

- LLM capabilities now match or exceed human reasoning for structured tasks

The question isn’t whether to adopt AI orchestration; it’s how quickly you can do it safely.

Start small:

- Pick one low-risk use case (e.g., auto-blocking malicious IPs)

- Run in read-only mode for 30 days

- Measure veto rate and error rate

- Gradually expand scope as confidence builds

The teams that master AI orchestration will operate at 10-100x the velocity of their competitors—while maintaining higher security and compliance postures. The teams that don’t will drown in operational toil.

Build the orchestrator. Empower the audit agent. And let AI handle the infrastructure so your engineers can focus on innovation.

Explore the full architecture: aws-global-wan on GitHub

Related reading: Paved Roads: AI-Powered Platform Engineering
